
Previous Research and its Significance

Overview

The major milestones of my research include the development of:

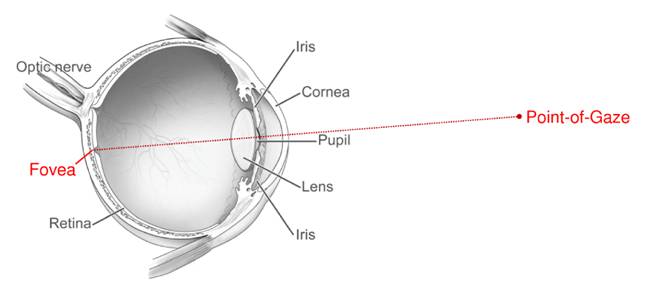

In the following sections I provide some formal definitions, touch on application areas of eye tracking, and elaborate on my previous research and its significance.

Definitions

When a person with normal vision examines an object, his/her eyes are oriented so that the image of the object falls on the highest-acuity region of the retina (the fovea) of each eye. The point-of-gaze (i.e., where a person is looking) can thus be formally defined as the point within the visual field that is imaged on the center of the fovea (Fig. 1).

Fig. 1: Schematics of the eye showing the fovea and the point-of-gaze.

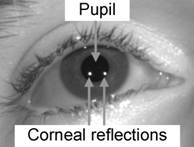

Systems that determine the point-of-gaze are referred to in a variety of ways, such as point-of-gaze estimation systems, gaze estimation systems, gaze trackers, eye-gaze trackers or eye trackers. The term remote indicates that the system operates at a distance from the subject (as opposed to head-mounted), and it is normally taken to mean that there is no contact with the subject. However, there are cases in which the system itself is at a distance from the subject but something makes physical contact with the subject (e.g., a chinrest/headrest or a tracking marker). To avoid any possible ambiguity, I often use the terms remote and non-contact together, as in the title of my PhD thesis [T3]. In this document, remote implies non-contact.

Application Areas

Many brain processes, including visual system functions as well as cognitive and emotional processes, govern the way the eyes move and look at the world. The analysis of eye movements and visual scanning patterns can thus be used in a wide variety of applications, such as the study and assessment of normal and abnormal functions of the visual and oculomotor systems, the study and assessment of mood, perception, attention and learning disorders, the analysis of reading and driving behavior, the assessment of pilot training, and the evaluation and optimization of GUI, website and advertisement design. Voluntary control of the eyes can also serve as an input modality in human-computer interfaces for applications such as gaming, interactive/contingent displays (e.g., in an operating room, in telepresence, in a virtual museum), and assistive technologies for severely motor-impaired persons. Such assistive technologies can enable their users to execute any computer or computer-mediated task, including communicating, controlling their immediate home environment (e.g., lighting, temperature, TV, audio system), and steering a motorized wheelchair.
Previous Research and its Significance

For decades, the remote eye tracking field has been dominated by methodologies/technologies that are based on the centers of the pupil and corneal reflection(s) extracted from images captured by video cameras. In this context, a corneal reflection (technically, the first Purkinje image, sometimes also referred to as corneal reflex or glint) is the virtual image of a near-infrared light source that is used to illuminate the eyes (near-infrared light is used because it is invisible to the human eye). This virtual image, which appears as a bright spot (Fig. 2), is formed by reflection of the light source at the front surface of the cornea (the front surface of the cornea resembles a convex mirror).
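To give a rough sense of where the corneal reflection forms, the cornea can be treated as a spherical convex mirror and the standard paraxial mirror equation applied. This is a minimal sketch, assuming a corneal radius of curvature of 7.8 mm (a typical adult value) and a light source 600 mm from the eye; both numbers and the function name `convex_mirror_image` are illustrative assumptions, not parameters of the systems described here.

```python
def convex_mirror_image(object_distance_mm: float, radius_mm: float) -> tuple[float, float]:
    """Paraxial image of a point source in a spherical convex mirror.

    Real-is-positive convention: the object distance is positive in front of the
    mirror, and a negative image distance means a virtual image behind the surface.
    Returns (image_distance_mm, lateral_magnification).
    """
    if object_distance_mm <= 0 or radius_mm <= 0:
        raise ValueError("object distance and radius must be positive")
    focal_length = -radius_mm / 2.0  # convex mirror: f = -R/2
    # Mirror equation 1/s_o + 1/s_i = 1/f, solved for the image distance s_i
    image_distance = 1.0 / (1.0 / focal_length - 1.0 / object_distance_mm)
    magnification = -image_distance / object_distance_mm  # positive: upright image
    return image_distance, magnification


if __name__ == "__main__":
    # Assumed values: corneal radius 7.8 mm, light source 600 mm from the cornea.
    s_i, m = convex_mirror_image(600.0, 7.8)
    print(f"virtual image {-s_i:.2f} mm behind the corneal surface, magnification {m:.4f}")
```

Under these assumptions the virtual image lies just inside the focal point, roughly R/2 (about 3.9 mm) behind the corneal surface, and is strongly demagnified, which is consistent with its appearance as a small bright spot in the eye image.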

Fig. 2: Close-up eye image where the pupil and two corneal reflections are indicated (corresponds to the system in Fig. 3 (a))

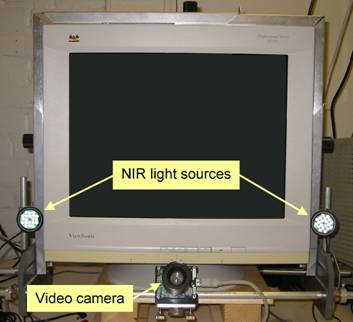

When I started my graduate studies in 2000, existing remote eye tracking technologies were based on black-box 2-D mapping techniques that performed very poorly in the presence of head movements unless complex motion compensation methodologies were used. Such motion compensation methodologies were often not fully successful and involved extra components, such as ultrasonic distance sensors, and/or moving parts, such as autofocus and/or zoom lens systems. The focus of my Master’s program was therefore the head movement problem. Instead of developing yet another motion compensation technique, I decided to develop a remote eye tracking methodology that would inherently account for head movements. With this in mind, I developed from scratch, based on the principles of geometrical optics, a 3-D mathematical model of the human eye and a system with one video camera and two light sources. Using this model, it is possible to determine the position and orientation of the eye in 3-D space and reconstruct the visual axis of the eye. The point-of-gaze is then determined as the intersection of the visual axis with the 3-D scene [T2]. The resulting remote eye tracker (Fig. 3 (a)) was, to the best of my knowledge, the first non-contact system reported in the literature that could estimate the point-of-gaze accurately in the presence of head movements using only eye images captured by a single fixed camera with a fixed lens. With this minimal hardware (one camera, two light sources, and no moving parts), the system could estimate the point-of-gaze with a root-mean-square error of less than 1° of visual angle, comparable to the best commercially available remote eye trackers [C5], [J2]. This eye tracker was also one of the first working systems to use a 3-D mathematical model of the eye.
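The last step described above (intersecting the reconstructed visual axis with the scene) and the accuracy metric quoted (root-mean-square error in degrees of visual angle) can both be sketched in a few lines. This is a minimal sketch, assuming a planar scene such as a computer screen and millimetre units; the 3-D eye model itself is not reproduced, so the origin and direction of the visual axis are simply taken as inputs, and all helper names are hypothetical.

```python
import math

Vec3 = tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def intersect_visual_axis(origin: Vec3, direction: Vec3,
                          plane_point: Vec3, plane_normal: Vec3) -> Vec3:
    """Point-of-gaze as the intersection of the visual axis (a ray) with a scene plane."""
    denom = dot(direction, plane_normal)
    if abs(denom) < 1e-12:
        raise ValueError("visual axis is parallel to the scene plane")
    t = dot(sub(plane_point, origin), plane_normal) / denom
    if t <= 0:
        raise ValueError("scene plane is behind the eye")
    return (origin[0] + t * direction[0],
            origin[1] + t * direction[1],
            origin[2] + t * direction[2])


def visual_angle_deg(eye: Vec3, p: Vec3, q: Vec3) -> float:
    """Angle subtended at the eye by two scene points, in degrees."""
    u, v = sub(p, eye), sub(q, eye)
    c = cross(u, v)
    # atan2(|u x v|, u . v) stays accurate for the small angles typical of gaze errors
    return math.degrees(math.atan2(math.sqrt(dot(c, c)), dot(u, v)))


def rms_error_deg(eye: Vec3, estimated: list[Vec3], true: list[Vec3]) -> float:
    """Root-mean-square point-of-gaze error in degrees of visual angle."""
    if not estimated or len(estimated) != len(true):
        raise ValueError("need equally sized, non-empty lists of points")
    errs = [visual_angle_deg(eye, e, t) for e, t in zip(estimated, true)]
    return math.sqrt(sum(x * x for x in errs) / len(errs))


if __name__ == "__main__":
    eye = (0.0, 0.0, 650.0)  # assumed eye position, 650 mm in front of the screen
    screen_point, screen_normal = (0.0, 0.0, 0.0), (0.0, 0.0, 1.0)  # screen in the z = 0 plane
    pog = intersect_visual_axis(eye, (0.1, 0.0, -1.0), screen_point, screen_normal)
    print(pog)  # (65.0, 0.0, 0.0)
    print(rms_error_deg(eye, [(10.0, 0.0, 0.0)], [(0.0, 0.0, 0.0)]))
```

For scale, at an assumed viewing distance of 650 mm, 1° of visual angle corresponds to roughly 11 mm on the screen (650 × tan 1° ≈ 11.3 mm). In a real system the eye position changes with head movement, so the angle would be computed per sample from the current eye position rather than from a fixed one.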

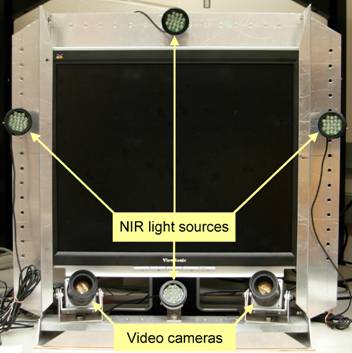

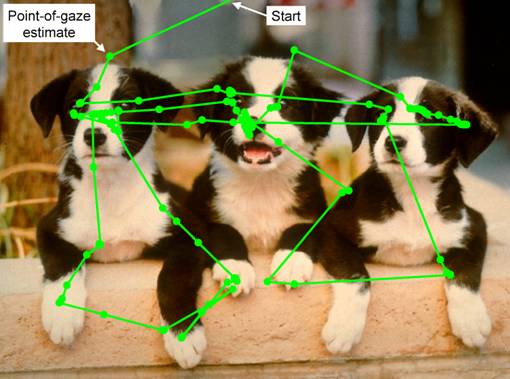

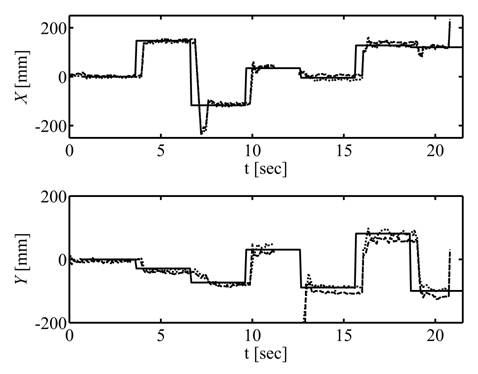

The single-camera system developed during my Master’s program, like the best commercially available remote eye trackers, has to be calibrated for each subject through a one-time procedure that requires the subject to fixate multiple points in the scene. A multiple-point calibration procedure, however, can be an obstacle in applications where subject cooperation is limited, such as those involving infants and mentally challenged individuals. The focus of my PhD program was therefore to minimize the personal calibration requirements. As a first step, I generalized the mathematical model, leading to the “General theory of remote gaze estimation using the pupil center and corneal reflections” [J2], which, according to Google Scholar, has been cited 267 times as of October 2015. This general theory covers the full range of possible system configurations, from the simplest, which includes one video camera and one light source, to the most complex, which includes multiple cameras and multiple light sources. The article provides fundamental insight into the limitations and potential of each system configuration, particularly regarding tolerance to head movements and personal calibration requirements. This work, together with parallel work by three other research groups, had a significant impact on the field of remote eye tracking, shifting it from 2-D black-box mapping techniques that are sensitive to head movements to 3-D model-based gaze estimation methods that are insensitive to head movements. I significantly expanded this general theory in my PhD thesis [T3], making it the most complete analysis of the problem of estimating the point-of-gaze from the pupil and corneal reflections using a 3-D model. The mathematical model in the general theory is used not only to estimate gaze but also as the basis of a simulation framework.
This simulation framework is an invaluable tool for studying the sensitivity of point-of-gaze estimation to several types of error as a function of the system configuration. Such studies provide valuable insights and ultimately yield system design guidelines, including the optimization of the geometry of the system setups. The results of my PhD research led to a new eye tracker that uses two fixed video cameras with fixed lenses (Fig. 3 (b)) and has a very quick and simple personal calibration routine requiring fixation on a single point for about 2 seconds [C8], [C10], [C13], [C14]. Such a calibration routine can easily be performed with infants [C10]; moreover, this system can measure relative eye movements without any personal calibration [C15]. The system was successfully tested with adults and infants (Fig. 4, Fig. 5). The tests with adults showed a point-of-gaze estimation accuracy of 0.4-0.6° of visual angle in the presence of head movements [C13]. Such accuracy is comparable to that of the best commercially available systems, which use multiple-point personal calibration procedures. Furthermore, to the best of my knowledge, this accuracy is significantly better than that of any other working system described in the literature that uses a single-point personal calibration routine. The ability of this technology to record infants’ visual scanning behavior can serve many applications that otherwise rely on subjective measurements performed by trained human observers. Examples of such applications are the study of the development and objective assessment of functions of the visual and oculomotor systems, the objective determination of attention allocation, and the study of cognitive development.
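The text above does not state exactly what the single-point calibration estimates. One common formulation in model-based gaze estimation, assumed here purely for illustration, is that the 3-D model reconstructs the optical axis of the eye while the calibration measures a fixed, subject-specific angular offset between the optical axis and the visual axis; under that assumption, one fixation on a known target is enough. The sketch represents directions by horizontal (yaw) and vertical (pitch) angles and treats the offset as additive in those angles, a simplification that ignores ocular torsion. All function names are hypothetical, and this is not presented as the method used in [C8], [C13].

```python
import math

Vec3 = tuple[float, float, float]


def direction_to_angles(d: Vec3) -> tuple[float, float]:
    """Yaw (horizontal) and pitch (vertical) of a direction, in radians.

    Assumes the eye looks roughly along -z, toward a screen in front of it.
    """
    x, y, z = d
    yaw = math.atan2(x, -z)
    pitch = math.atan2(y, math.hypot(x, z))
    return yaw, pitch


def angles_to_direction(yaw: float, pitch: float) -> Vec3:
    """Unit direction for the given yaw and pitch (inverse of direction_to_angles)."""
    return (math.cos(pitch) * math.sin(yaw),
            math.sin(pitch),
            -math.cos(pitch) * math.cos(yaw))


def calibrate_single_point(optical_axis: Vec3, eye_point: Vec3, target: Vec3) -> tuple[float, float]:
    """Estimate the (yaw, pitch) offset of the visual axis from the optical axis.

    eye_point is an assumed point on both axes (e.g., the center of corneal
    curvature); while the subject fixates `target`, the visual axis points at it.
    """
    o_yaw, o_pitch = direction_to_angles(optical_axis)
    v_yaw, v_pitch = direction_to_angles(tuple(t - e for t, e in zip(target, eye_point)))
    return v_yaw - o_yaw, v_pitch - o_pitch


def visual_axis(optical_axis: Vec3, offset: tuple[float, float]) -> Vec3:
    """Apply a previously calibrated angular offset to a new optical-axis estimate."""
    yaw, pitch = direction_to_angles(optical_axis)
    return angles_to_direction(yaw + offset[0], pitch + offset[1])


if __name__ == "__main__":
    # Simulated calibration with an assumed true offset of 5 deg horizontally, 1.5 deg vertically.
    true_offset = (math.radians(5.0), math.radians(1.5))
    eye = (30.0, 0.0, 650.0)  # hypothetical eye position in mm
    optical = angles_to_direction(0.05, -0.02)
    visual = angles_to_direction(0.05 + true_offset[0], -0.02 + true_offset[1])
    target = tuple(e + 650.0 * v for e, v in zip(eye, visual))  # a point on the visual axis
    offset = calibrate_single_point(optical, eye, target)
    print([round(math.degrees(a), 3) for a in offset])  # [5.0, 1.5]
```

Because the offset is assumed constant for a given subject, it can be reused for every subsequent optical-axis estimate, regardless of head position, which is what makes a single fixation sufficient in this simplified picture.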

Fig. 4: Visual scanning pattern of an adult subject, recorded after completing a single-point personal calibration routine.

While the central part of my work was the 3-D mathematical model used to estimate the point-of-gaze, my expertise extends to every aspect of the development of eye tracking technologies. For example, I selected the video cameras, their optics and the near-infrared light sources, built electronics to control the light sources, developed and implemented some of the image processing algorithms, created system and personal calibration procedures (system calibration includes full camera calibration), designed a core part of the real-time multithreaded architecture of the eye tracking application (in C/C++ under Microsoft Windows), and designed, built and tested prototype systems. Throughout my work I collaborated with several fellow graduate students and supervised many undergraduate students who focused on the development, implementation and testing of most of the image processing algorithms and of the eye tracking application. I also worked side-by-side with the machine shop staff on machining many of the parts for the prototype systems.

During my postdoctoral program I worked on gaze-based assistive technologies for communication and control for severely motor-impaired individuals with normal cognitive functions and voluntary control of their eyes. The output of a single-camera remote eye tracker (based on the methodologies developed during my graduate program) was used to position the mouse pointer wherever the user was looking on a computer screen, while different methods were used to generate the mouse “clicks”. The most commonly used method to generate mouse clicks with gaze is dwell time: the user has to look at the item of interest for a given amount of time. However, a low dwell time threshold can result in frequent unintended actions (known as the Midas touch problem), whereas a high dwell time threshold implies longer delays and lower command rates.
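Dwell-time selection, as described above, reduces to a small state machine over timestamped gaze samples. This is a minimal sketch, assuming each sample has already been mapped to the on-screen item under the estimated point-of-gaze (or `None`); the `GazeSample` type and `dwell_clicks` function are hypothetical names, and requiring the gaze to leave an item before it can fire again is one common design choice, not necessarily the one used in this work.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator, Optional


@dataclass(frozen=True)
class GazeSample:
    t_ms: float            # timestamp in milliseconds
    item: Optional[str]    # id of the item under the point-of-gaze, or None


def dwell_clicks(samples: Iterable[GazeSample], dwell_ms: float) -> Iterator[tuple[float, str]]:
    """Yield (time, item) whenever gaze has stayed on one item for at least dwell_ms."""
    current: Optional[str] = None
    entered_at = 0.0
    fired = False
    for s in samples:
        if s.item != current:
            # Gaze moved to a different item (or off all items): restart the dwell timer.
            current, entered_at, fired = s.item, s.t_ms, False
        elif current is not None and not fired and s.t_ms - entered_at >= dwell_ms:
            fired = True  # one click per visit; gaze must leave and return to click again
            yield s.t_ms, current


if __name__ == "__main__":
    # A 500 ms look at "A", a gap, then a 200 ms glance at "B", sampled every 100 ms.
    trace = ([GazeSample(t, "A") for t in range(0, 600, 100)]
             + [GazeSample(600, None)]
             + [GazeSample(t, "B") for t in range(700, 1000, 100)])
    print(list(dwell_clicks(trace, dwell_ms=300)))  # [(300, 'A')]
    print(list(dwell_clicks(trace, dwell_ms=100)))  # [(100, 'A'), (800, 'B')]
```

The two runs illustrate the trade-off in the text: with the lower threshold the brief glance at "B" also triggers a selection (the Midas touch problem), while the higher threshold filters it out at the cost of a longer wait before every intended selection.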
Our initial approach to overcoming the Midas touch problem was to generate the mouse clicks with a signal from a non-invasive EEG-based brain-computer interface. However, the command rate of the brain-computer interface was too low for practical use, so it was replaced with a “tooth-clicker” device. This non-invasive, lightweight, discreet and wireless device detects the vibration elicited when the user gently clenches his/her jaw (an action referred to as a “tooth-click”). A pilot gaze typing study using a virtual keyboard showed that the tooth-clicker allows relatively high command rates (up to 60 characters/min), comparable to those attained with short dwell times, while overcoming the Midas touch problem [C16]. The impact of the novel remote eye tracking methodologies that I developed extends beyond the prototypes that I built during my graduate studies: several eye trackers based on my work (both single-camera and two-camera systems) are currently used in a variety of research projects at Ontario universities and hospitals.
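The tooth-clicker is described only at the level of detecting the vibration produced by a gentle jaw clench. Purely as an illustration, such an event could be detected with an amplitude threshold plus a refractory period, so that one clench yields one click rather than one click per oscillation. The sketch assumes a uniformly sampled vibration signal (for example, from an accelerometer); the function name, threshold and refractory values are hypothetical and are not taken from the device reported in [C16].

```python
from typing import Sequence


def detect_tooth_clicks(signal: Sequence[float], sample_rate_hz: float,
                        threshold: float, refractory_s: float) -> list[float]:
    """Return click times (s) where |signal| reaches threshold outside a refractory window."""
    if sample_rate_hz <= 0:
        raise ValueError("sample_rate_hz must be positive")
    refractory = max(1, round(refractory_s * sample_rate_hz))  # window length in samples
    clicks: list[float] = []
    next_allowed = 0
    for i, x in enumerate(signal):
        if i >= next_allowed and abs(x) >= threshold:
            clicks.append(i / sample_rate_hz)
            next_allowed = i + refractory  # ignore the rest of this vibration burst
    return clicks


if __name__ == "__main__":
    fs = 1000.0  # assumed sampling rate in Hz
    sig = [0.0] * 1000
    for i in range(200, 210):   # a short oscillating burst, as from one clench
        sig[i] = 1.0 if i % 2 else -1.0
    for i in range(600, 605):   # a second, weaker burst
        sig[i] = 0.8
    print(detect_tooth_clicks(sig, fs, threshold=0.5, refractory_s=0.1))
```

The refractory period is the key design choice here: without it, every sample of a burst that exceeds the threshold would register as a separate click.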
