How granulation works...

You may have heard of the work of the French mathematician Jean-Baptiste Fourier. Fourier came up with a theory which states that any complex vibration (like a sound with a perceivable pitch or pitches) can be understood as a collection of simple vibrations, i.e. vibrations at a single frequency. This is similar to the idea that a given colour can be understood as light waves at a particular frequency or collection of frequencies. (Frequency just refers to the rate at which a thing happens, in the case of sound how fast the air molecules vibrate.) Fourier's work has applications in a wide variety of fields including music, engineering, physics, and computer science.

Think back to the previous example: if you slow down the speed of a sound, you must be slowing down the rate of its vibration. So you can see why duration, frequency, and pitch are interrelated: they all have to do with time. The vibrations are like a series of waves on the surface of a lake; if the distance between wavecrests increases, you need more space to fit in the same number of them.
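If you would like to check this relationship for yourself, here is a minimal sketch in Python. It is only an illustration, not how any particular program does it: the sample rate, the 440 Hz test tone, and the helper names `sine`, `change_speed`, and `estimate_freq` are all assumptions made up for this example.

```python
import math

SAMPLE_RATE = 8000  # samples per second (an assumed value for this sketch)

def sine(freq_hz, seconds):
    """Return a list of samples for a plain sine tone."""
    n = int(seconds * SAMPLE_RATE)
    return [math.sin(2 * math.pi * freq_hz * i / SAMPLE_RATE) for i in range(n)]

def change_speed(samples, speed):
    """Read through `samples` at `speed` times the normal rate.

    Values between two stored samples are filled in by linear interpolation.
    """
    out = []
    pos = 0.0
    while pos < len(samples) - 1:
        i = int(pos)
        frac = pos - i
        out.append(samples[i] * (1 - frac) + samples[i + 1] * frac)
        pos += speed
    return out

def estimate_freq(samples):
    """Rough frequency estimate: count upward zero crossings per second."""
    crossings = sum(1 for a, b in zip(samples, samples[1:]) if a < 0 <= b)
    return crossings * SAMPLE_RATE / len(samples)

tone = sine(440.0, 1.0)          # one second of a 440 Hz tone
slow = change_speed(tone, 0.5)   # the same tone played at half speed

print(len(tone), round(estimate_freq(tone)))
print(len(slow), round(estimate_freq(slow)))
```

The half-speed version has roughly twice as many samples as the original (twice the duration), and its estimated frequency is roughly half, which is an octave lower in pitch.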

Waves are in fact a very common way of thinking about both sound and light. If you know a little about physics, you may have heard of something called the wave-particle duality. This states that light (among other things) can be understood both as waves and particles. So is there something similar for sound?

The answer is: sort of. A Hungarian-born British scientist named Dennis Gabor came up with a theory which said that any sound can be represented as a collection of very short sounds (particles, so to speak), on the order of 1/20th of a second in duration. He called these grains. This turned out to be a pretty good way of understanding sound, and had lots of practical applications in computer music, notably in imitating the voice. (The vocal cords actually open and close many times a second, interrupting the stream of air.) Gabor noted that these short grains are like basic elements of sound which can't be reduced to smaller parts. Much shorter and you just have noise. Much longer and they don't fuse together to make a bigger sound. Grains within these thresholds of perception have another interesting property: it doesn't matter to the ear if you play them forwards or backwards!

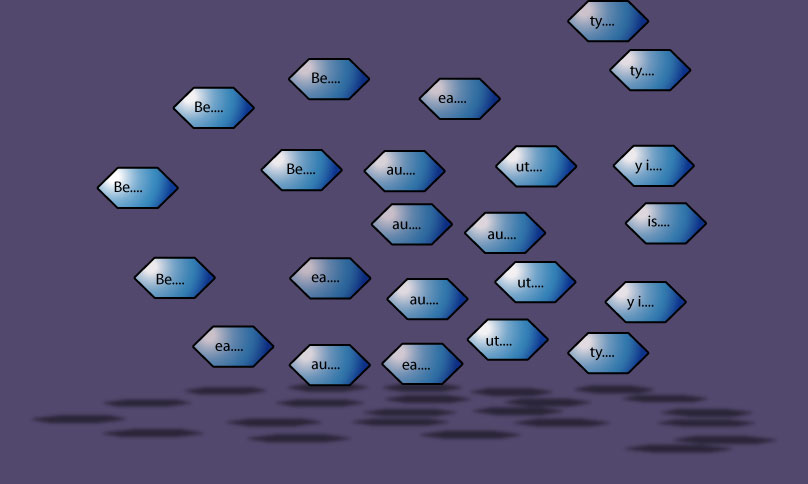

When you granulate a soundfile you essentially copy and play back little bits of it as grains. You can think of these grains as tiny individual soundfiles. The image above illustrates this. (The actual bits of sound would be much shorter than in the example.)

Now as you are producing and playing back these grains, you have to decide where in the soundfile to copy them from. If you start at the beginning and move through the soundfile at about the normal rate until you run out of stuff to copy and play back, you will end up with a composite sound that is about the same duration as the original. Now (and here's the clever part) each of these grains is like its own little soundfile, so if you play the grains back at the normal speed, but move through copying grains at half the speed, you can double the duration of the soundfile without affecting its pitch! What's more, by changing the rate of playback of the individual grains (as was done with the soundfile on the preceding page) but still moving through the soundfile at the normal rate, you can change the pitch without changing the duration! The two aspects of the sound can be controlled with complete independence!
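The two controls described above can be sketched in a few lines of Python. Treat this as a rough illustration under stated assumptions rather than JavaGran's actual method: the function `granulate`, its `stretch` and `pitch` parameters, the fade-in/fade-out envelope on each grain, and the half-grain overlap are all choices made for this example. The 400 Hz test tone is also picked deliberately so that successive grains happen to line up with each other at these settings; with arbitrary material they will not, and the result is rougher.

```python
import math

SAMPLE_RATE = 8000     # samples per second (assumed)
GRAIN_LEN = 400        # 1/20th of a second at this sample rate
HOP = GRAIN_LEN // 2   # a new grain starts every half grain, so grains overlap

def sine(freq_hz, seconds):
    n = int(seconds * SAMPLE_RATE)
    return [math.sin(2 * math.pi * freq_hz * i / SAMPLE_RATE) for i in range(n)]

def estimate_freq(samples):
    """Rough frequency estimate: count upward zero crossings per second."""
    crossings = sum(1 for a, b in zip(samples, samples[1:]) if a < 0 <= b)
    return crossings * SAMPLE_RATE / len(samples)

def read(samples, pos):
    """Interpolated sample at fractional position `pos` (silence outside the file)."""
    i = int(pos)
    if pos < 0 or i + 1 >= len(samples):
        return 0.0
    frac = pos - i
    return samples[i] * (1 - frac) + samples[i + 1] * frac

def granulate(samples, stretch=1.0, pitch=1.0):
    """Rebuild `samples` from overlapping grains.

    `stretch` sets how slowly we move through the file when choosing where
    to copy grains from; `pitch` sets how fast each grain is played back.
    """
    out = [0.0] * int(len(samples) * stretch)
    # Each grain fades in and out so that overlapping grains blend smoothly.
    envelope = [0.5 - 0.5 * math.cos(2 * math.pi * j / GRAIN_LEN)
                for j in range(GRAIN_LEN)]
    for start in range(0, len(out), HOP):
        source_pos = start / stretch   # where in the file this grain is copied from
        for j in range(GRAIN_LEN):
            if start + j >= len(out):
                break
            out[start + j] += envelope[j] * read(samples, source_pos + j * pitch)
    return out

tone = sine(400.0, 1.0)
longer = granulate(tone, stretch=2.0, pitch=1.0)   # twice as long, same pitch
higher = granulate(tone, stretch=1.0, pitch=2.0)   # same length, octave higher

print(len(longer), round(estimate_freq(longer)))
print(len(higher), round(estimate_freq(higher)))
```

With `stretch=2.0` the output has twice as many samples but its estimated frequency stays close to 400 Hz; with `pitch=2.0` the length is unchanged and the estimate lands close to 800 Hz. The estimates come out slightly low because the last few grains run off the end of the file and fade to silence.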

Granulator programs generally layer a number of grains (play back more than one at once) in order to help with the fusion effect. JavaGran actually uses several independent streams of grains. It also turns out that as you move through the original soundfile it helps to copy grains from a small range instead of a single point, and most applications either do this or give you the option.
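As a rough sketch of those two ideas (several streams, and grains copied from a small range rather than a single point), the following Python function only works out where each grain would go; it does not produce sound. Everything here is hypothetical: the name `grain_schedule`, the `spread` parameter, and the decision to stagger the streams are assumptions for illustration, not a description of JavaGran's internals.

```python
import random

def grain_schedule(file_len, out_len, hop, streams=3, spread=200, seed=0):
    """Return a (stream, output_start, source_start) tuple for every grain.

    All lengths and positions are measured in samples.
    """
    rng = random.Random(seed)   # seeded so the example is repeatable
    schedule = []
    for stream in range(streams):
        # Assumption: stagger the streams so their grains do not all begin together.
        offset = stream * hop // streams
        for out_start in range(offset, out_len, hop):
            centre = out_start * file_len / out_len   # nominal point in the file
            jitter = rng.uniform(-spread, spread)     # pick from a small range around it
            source_start = min(max(centre + jitter, 0.0), file_len - 1)
            schedule.append((stream, out_start, source_start))
    return schedule

# Stretch an 8000-sample file to 16000 samples using three streams.
plan = grain_schedule(file_len=8000, out_len=16000, hop=200)
for stream, out_start, source_start in plan[:3]:
    print(stream, out_start, round(source_start))
```

Each grain is copied from somewhere within `spread` samples of the point the stream has nominally reached, so no two passes through the file need pick exactly the same bits.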

> Click here to learn about some of the technologies underlying JavaGran...

> Or, if you're not interested, move on to the application itself and start making sound!
